PitchNet: Unsupervised Singing Voice Conversion with Pitch Adversarial Network

Introduction

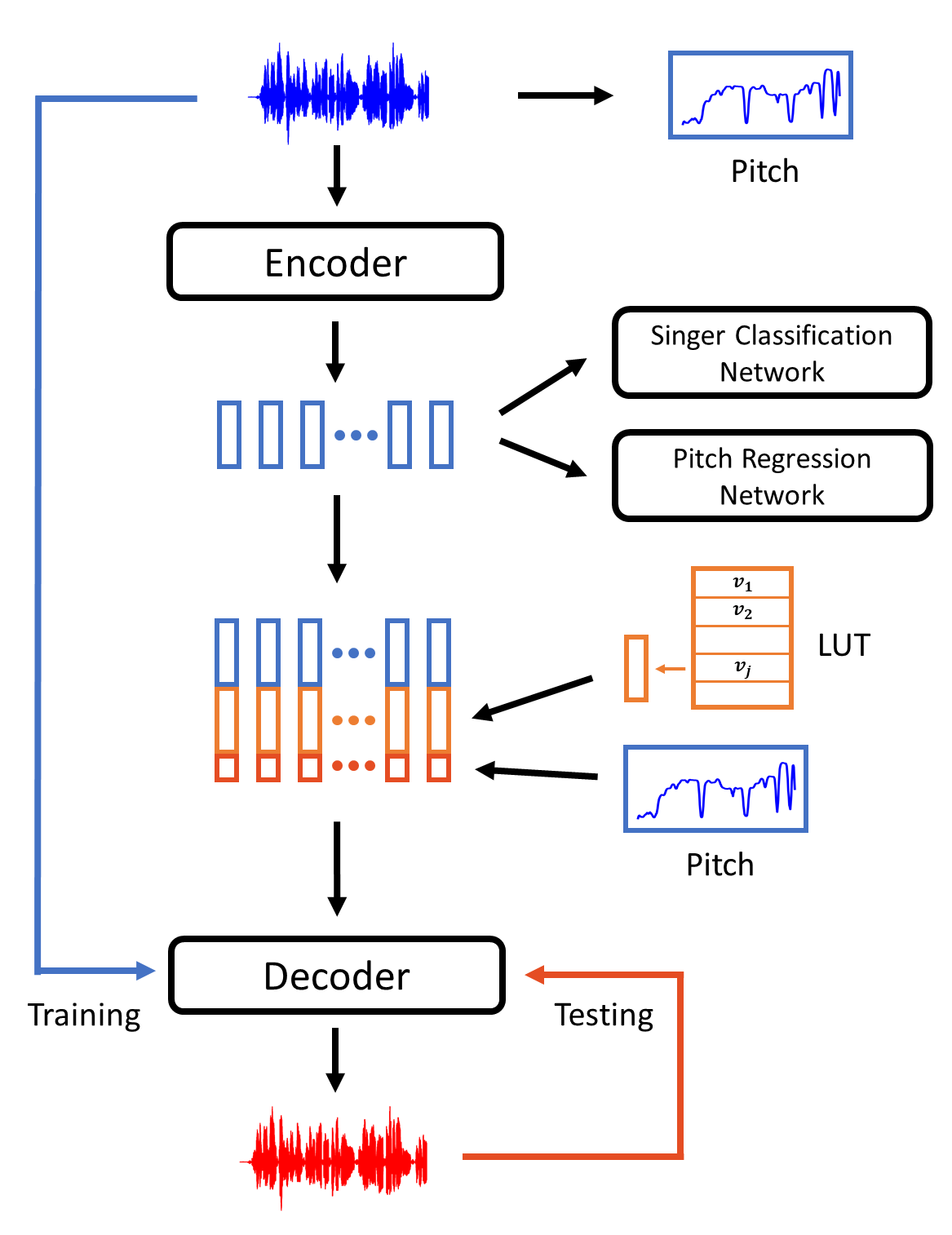

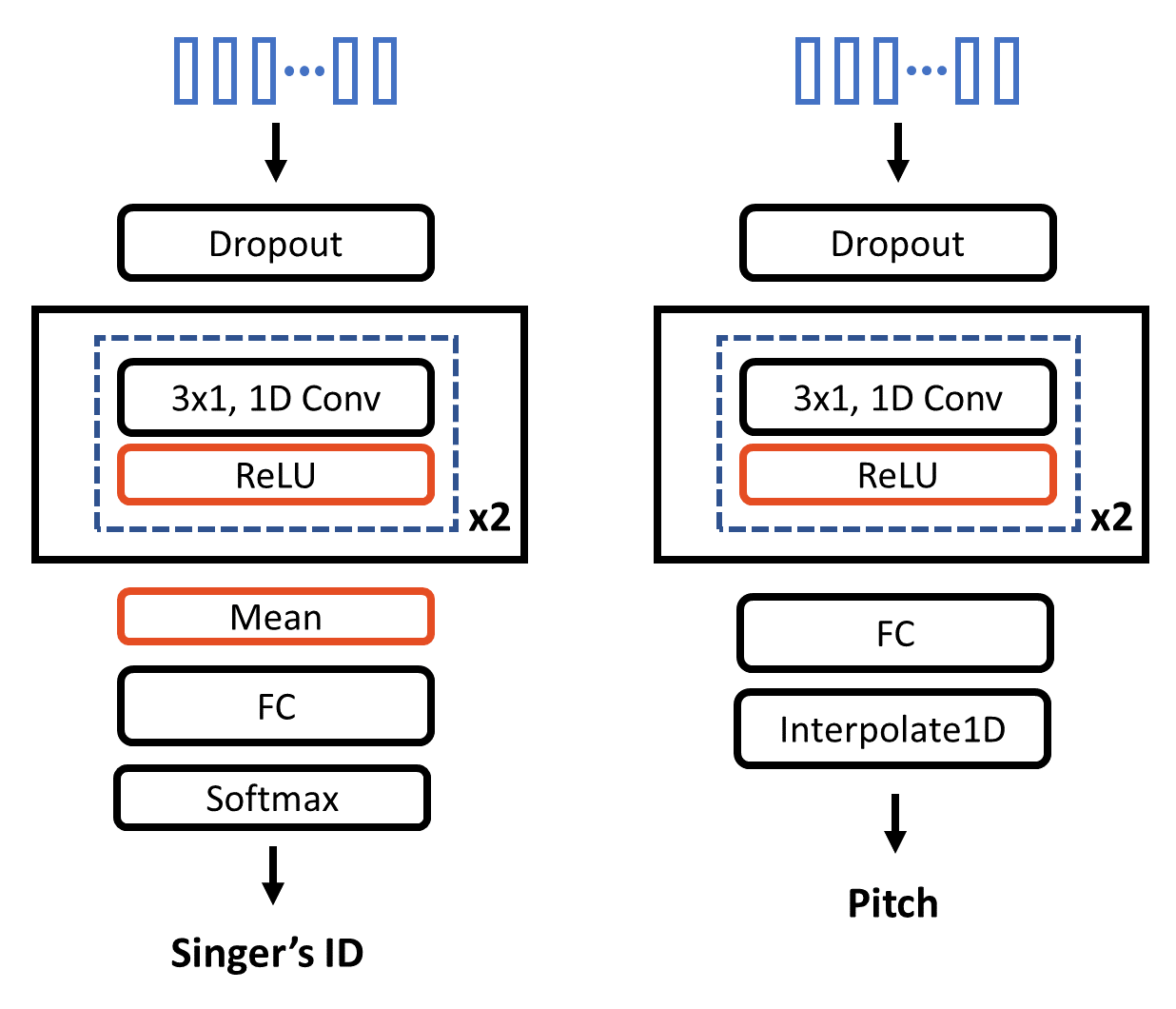

Singing voice conversion converts one singer's voice into another's without changing the sung content. Recent work shows that unsupervised singing voice conversion can be achieved with an autoencoder-based approach [1]. However, the converted singing voice can easily go out of key, which shows that the existing approach cannot model pitch information precisely. In this paper, we advance the unsupervised singing voice conversion method proposed in [1] to achieve more accurate pitch translation and flexible pitch manipulation. Specifically, the proposed PitchNet adds an adversarially trained pitch regression network, which forces the encoder network to learn a pitch-invariant phoneme representation, and a separate module that feeds pitch extracted from the source audio to the decoder network. Our evaluation shows that the proposed method greatly improves the quality of the converted singing voice, raising the MOS from 2.92 to 3.75. We also demonstrate that the pitch of the converted singing can be easily controlled during generation by changing the level of the extracted pitch before passing it to the decoder network.
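To make the adversarial idea concrete, here is a minimal numeric sketch of how two opposing objectives could be combined. It is an illustration under stated assumptions, not the exact loss used by PitchNet: the function names, the mean squared error, and the weight `lam` are all hypothetical choices. The pitch regressor is trained to predict pitch from the encoder output, while the encoder is rewarded when that prediction fails.

```python
def mse(pred, target):
    """Mean squared error between two equal-length sequences."""
    if len(pred) != len(target) or not pred:
        raise ValueError("sequences must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def adversarial_losses(recon_loss, pitch_pred, pitch_true, lam=0.1):
    """Return (regressor_loss, encoder_loss) for one training step.

    Assumption: the regressor minimizes its pitch error, and the encoder
    minimizes reconstruction error minus lam times that same pitch error,
    so it is pushed toward features that carry little pitch information.
    """
    pitch_loss = mse(pitch_pred, pitch_true)
    regressor_loss = pitch_loss
    encoder_loss = recon_loss - lam * pitch_loss
    return regressor_loss, encoder_loss


# Hypothetical values for a single batch.
regressor_loss, encoder_loss = adversarial_losses(
    recon_loss=1.0, pitch_pred=[1.0, 2.0], pitch_true=[1.0, 4.0], lam=0.5
)
```

In a real system the two losses would update different sets of parameters (regressor versus encoder and decoder), typically in alternating steps or through a gradient reversal layer.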

Model Architecture


Audio Samples

Singing voice of VKOW:

| Target | Nachmani et al. | Ours |
|---|---|---|
| JLEE | | |
| JTAN | | |
| KENN | | |
| SAMF | | |
| ZHIY | | |

Singing voice of JLEE:

| Target | Nachmani et al. | Ours |
|---|---|---|
| VKOW | | |
| JTAN | | |
| KENN | | |
| SAMF | | |
| ZHIY | | |

Singing voice of JTAN:

| Target | Nachmani et al. | Ours |
|---|---|---|
| JLEE | | |
| VKOW | | |
| KENN | | |
| SAMF | | |
| ZHIY | | |

Conversion results with different input pitch

* Convert from VKOW to JLEE

Source:

| Input Pitch | Output |
|---|---|
| pitch x 1.0 | |
| pitch x 0.7 | |
| pitch x 1.2 | |
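The factors in the table above (1.0, 0.7, 1.2) can be read as multipliers applied to the extracted pitch before it reaches the decoder. The sketch below shows one way such scaling might look. It assumes the pitch is a per-frame F0 contour in Hz with unvoiced frames marked as 0.0; the function names and this representation are illustrative assumptions, not details given on this page.

```python
import math


def scale_pitch(f0_hz, factor):
    """Scale the voiced frames of an F0 contour by a constant factor.

    Assumption: unvoiced frames are encoded as 0.0 and are left untouched.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    return [f * factor if f > 0 else 0.0 for f in f0_hz]


def factor_to_semitones(factor):
    """Convert a frequency ratio into a shift in semitones."""
    return 12.0 * math.log2(factor)


# Hypothetical contour: unvoiced, three voiced frames, unvoiced.
contour = [0.0, 220.0, 233.1, 246.9, 0.0]
lowered = scale_pitch(contour, 0.7)
raised = scale_pitch(contour, 1.2)
```

Under this reading, a factor of 1.2 raises the melody by roughly 3.2 semitones and a factor of 0.7 lowers it by roughly 6.2 semitones, while a factor of 1.0 leaves the source melody unchanged.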